Brownstone Institute

The Economic Disaster of the Pandemic Response

This article originally published by the Brownstone Institute

On April 15, 2020, a full month after the President’s fateful news conference that greenlighted lockdowns to be enacted by the states for “15 Days to Flatten the Curve,” Donald Trump had a revealing White House conversation with Anthony Fauci, the head of the National Institute of Allergy and Infectious Diseases, who had already become the public face of the Covid response.

“I’m not going to preside over the funeral of the greatest country in the world,” Trump wisely said, as reported in Jared Kushner’s book Breaking History. The two weeks of lockdown were over, the promised Easter opening had blown right by too, and Trump was done. He also suspected that he had been misled and was no longer speaking to coronavirus coordinator Deborah Birx.

“I understand,” Fauci responded meekly. “I just do medical advice. I don’t think about things like the economy and the secondary impacts. I’m just an infectious diseases doctor. Your job as president is to take everything else into consideration.”

That conversation both reflected and entrenched the tone of the debate over the lockdowns and vaccine mandates and eventually the national crisis that they precipitated. In these debates in the early days, and even today, the idea of “the economy” – viewed as mechanistic, money-centered, mostly about the stock market, and detached from anything truly important – was pitted against public health and lives.

You choose one or the other. You cannot have both. Or so they said.

Pandemic Practice

Also in those days, it was widely believed, stemming from a strange ideology hatched 16 years earlier, that the best approach to pandemics was to institute massive human coercion like we have never before experienced. The theory was that if you make humans behave like non-player characters in computer models, you can keep them from infecting each other until a vaccine arrives which will eventually wipe out the pathogen.

The new lockdown theory stood in contrast to a century of pandemic advice and practice from public-health wisdom. Only a few cities tried coercion and quarantine to deal with the 1918 pandemic, mostly San Francisco (also the home of the first Anti-Mask League) whereas most just treated disease person by person. The quarantines of that period failed and so landed in disrepute. They were not tried again in the disease scares (some real, some exaggerated) of 1929, 1940-44, 1957-58, 1967-68, 2003, 2005, or 2009. In those days, even the national media urged calm and therapeutics during each infectious-disease scare.

Somehow and for reasons that should be discussed – it could be intellectual error, political priorities, or some combination – 2020 became the year of an experiment without precedent, not only in the US but all over the world with the exception of perhaps five nations among which we can include the state of South Dakota. The sick and the well alike were quarantined through stay-at-home orders, domestic capacity limits, and business, school, and church shutdowns.

Nothing turned out according to plan. The economy can be turned off using coercion but the resulting trauma is so great that turning it on again is not so easy. Instead, thirty months later, we face an economic crisis without precedent in our lifetimes, the longest period of declining real income in the post-war period, a health and educational crisis, an exploding national debt plus inflation at a 40-year high, continued and seemingly random shortages, dysfunction in labor markets that defies all models, the breakdown of international trade, a collapse in consumer confidence not seen since these numbers were first recorded, and a dangerous level of political division.

And what happened to Covid? It came anyway, just as many epidemiologists predicted it would. The stratified impact of medically significant outcomes was also predictable based on what we knew from February: the at-risk population was largely the elderly and infirm. To be sure, most everyone would eventually meet the pathogen with varying degrees of severity: some people shook it off in a couple of days, others suffered for weeks, and others perished. Even now, there is grave uncertainty about the data and causality because of the probability of misattribution stemming from both faulty PCR testing and financial incentives given out to hospitals.

Tradeoffs

Even if lockdowns had saved lives over the long term – the literature on this overwhelmingly suggests that the answer is no – the proper question to have asked was: at what cost? The economic question was: what are the tradeoffs? But because economics as such was shelved for the emergency, the question was not raised by policy makers. Thus did the White House on March 16, 2020, send out the most dreaded sentence pertaining to economics that one can imagine: “bars, restaurants, food courts, gyms, and other indoor and outdoor venues where groups of people congregate should be closed.”

The results are legion. The lockdowns kicked off a whole range of other disastrous policy decisions, among them an epic bout of government spending. What we are left with is a national debt that is 121% of GDP. This compares to 35% of GDP in 1981 when Ronald Reagan correctly declared it a crisis. Government spending in the Covid response amounted to at least $6 trillion above normal operations, creating debt that the Federal Reserve purchased with newly created money nearly dollar for dollar.

Money Printing

From February to May 2020, M2 increased by an average of $814.3 billion per month. On May 18, 2020, M2 was rising 22% year over year, compared with only 6.7% in March that year. It was not yet the peak. That came after the new year, when on February 22, 2021, the M2 annual rate of increase reached a staggering 27.5%.

At the very same time, the velocity of money behaved as one would expect in a crisis of this sort. It plummeted an incredible 23.4% in the second quarter. A crashing rate at which money is being spent puts deflationary pressure on prices regardless of what happens with money supply. In this case, the falling velocity was a temporary salvation. It pushed the bad effects of this quantitative easing – to invoke a euphemism from 2008 – off into the future.
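The offsetting effect described above can be framed with the textbook equation of exchange, M × V = P × Q, which the article does not name explicitly. What follows is only a minimal sketch: it assumes the 22% M2 growth and the 23.4% velocity drop cover comparable periods (the text does not say so), and the helper name `nominal_spending_change` is hypothetical.

```python
def nominal_spending_change(money_growth: float, velocity_change: float) -> float:
    """Fractional change in nominal spending (P*Q) implied by M*V = P*Q.

    Both arguments are fractional changes, e.g. 0.22 for +22%.
    """
    return (1.0 + money_growth) * (1.0 + velocity_change) - 1.0


# Illustrative pairing of two figures quoted in the article (an assumption,
# since the article does not state that they cover the same period).
change = nominal_spending_change(money_growth=0.22, velocity_change=-0.234)
print(f"Implied change in nominal spending: {change:.1%}")
```

Under those assumptions, total nominal spending falls by about 6.5% despite the surge in the money supply, which is the sense in which falling velocity held back price pressure for a time.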

That future is now. The eventual result is the highest inflation in 40 years, which is not slowing down but accelerating, at least according to the October 12, 2022 Producer Price Index, which is hotter than it has been in months. It is running ahead of the Consumer Price Index, which is a reversal from earlier in the lockdown period. This new pressure on producers heavily impacted the business environment and created recessionary conditions.

A Global Problem

Moreover, this was not just a US problem. Most nations in the world followed the same lockdown strategy while attempting to substitute spending and printing for real economic activity. The cause-and-effect relationship holds the world over. Central banks coordinated and their societies all suffered.

The Fed is being called upon daily to step up its lending to foreign central banks through the discount window for emergency loans. It is now at the highest level since the Spring 2020 lockdowns. The Fed lent $6.5 billion to two foreign central banks in one week in October 2022. The numbers are truly scary and foreshadow a possible international financial crisis.

The Great Head Fake

But back in the spring and summer of 2020, we seemed to experience a miracle. Governments around the country had crushed social functioning and free enterprise and yet real income went soaring. Between February 2020 and March 2021, real personal income during a time with low inflation was up by $4.2 trillion. It felt like magic: a lockdown economy but riches were pouring in.

And what did people do with their new-found riches? There was Amazon. There was Netflix. There was the need for all sorts of new equipment to feed our new existence as digital everything. All these companies benefited enormously while others suffered. Even so we paid off credit card debt. And much of the stimulus was socked away as savings. The first stimulus went straight to the bank: the personal savings rate went from 9.6% to 33% in the course of just one month.

After the summer, people started to get the hang of getting free money from government dropped into their bank accounts. The savings rate started to fall: by November 2020, it was back down to 13.3%. Once Joseph Biden came to power and unleashed another round of stimulus, the savings rate went back up to 26.3%. And just fast forward to the present and we find people saving 3.5% of income, which is half the historical norm dating back to 1960 and about where it was in 2005 when low interest rates fed the housing boom that went bust in 2008. Meanwhile credit card debt is now soaring, even though interest rates are 17% and higher.

In other words, we experienced the wildest swing from shocking riches to rags in a very short period of time. The curves all inverted once the inflation came along to eat away the value of the stimulus. All that free money turned out not to be free at all but rather very expensive. The dollar of January 2020 is now worth only $0.87, which is to say that the stimulus spending covered by Federal Reserve printing stole $0.13 of every dollar in the course of only 2.5 years.
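The purchasing-power figure follows from simple arithmetic: if the price level rises by a cumulative fraction p, an old dollar buys 1 / (1 + p) of what it used to. A minimal sketch of that relationship, using hypothetical helper names (`purchasing_power`, `implied_price_increase`) that come from no library:

```python
def purchasing_power(cumulative_inflation: float) -> float:
    """Value of one old dollar after prices rise by `cumulative_inflation` (0.15 = 15%)."""
    if cumulative_inflation <= -1.0:
        raise ValueError("cumulative inflation must be greater than -100%")
    return 1.0 / (1.0 + cumulative_inflation)


def implied_price_increase(remaining_value: float) -> float:
    """Cumulative price increase implied by an old dollar now worth `remaining_value`."""
    if remaining_value <= 0.0:
        raise ValueError("remaining value must be positive")
    return 1.0 / remaining_value - 1.0


# The article's figure: a January 2020 dollar is now worth $0.87.
rise = implied_price_increase(0.87)
print(f"Implied cumulative price increase: {rise:.1%}")
print(f"Round trip back to dollar value: ${purchasing_power(rise):.2f}")
```

Note that a dollar worth $0.87 corresponds to prices roughly 14.9% higher, not 13% higher, because the loss is measured against the new, higher price level.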

It was one of the biggest head fakes in the history of modern economics. The pandemic planners created paper prosperity to cover up for the grim reality all around. But it did not and could not last.

Right on schedule, the value of the currency began to tumble. Between January 2021 and September 2022, prices increased 13.5% across the board, costing the average American family $728 in September alone. Even if inflation stops today, the inflation already in the bag will cost the American family $8,739 over the next 12 months, leaving less money to pay off soaring credit card debt.
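The twelve-month figure is essentially the monthly cost carried forward for a year. A minimal sketch, assuming the monthly cost stays constant (the helper name `annualized_cost` is hypothetical); the small gap from the quoted $8,739 is consistent with an unrounded monthly estimate of about $728.25:

```python
def annualized_cost(monthly_cost: float, months: int = 12) -> float:
    """Total cost if the same monthly cost persists for `months` months."""
    if months < 0:
        raise ValueError("months must be non-negative")
    return monthly_cost * months


rounded_total = annualized_cost(728)       # using the rounded monthly figure
implied_monthly = 8739 / 12                # monthly figure implied by the annual total
print(f"12 x $728 = ${rounded_total:,.0f}")
print(f"$8,739 / 12 = ${implied_monthly:,.2f} per month")
```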

Let’s return to the salad days before the inflation hit and when the Zoom class experienced delight at their new riches and their work-from-home luxuries. On Main Street, matters looked very different. I visited two medium-sized towns in New Hampshire and Texas over the course of the summer of 2020. I found nearly all businesses on Main Street boarded up, malls empty but for a few masked maintenance men, and churches silent and abandoned. There was no life at all, only despair.

The look of most of America in those days – not even Florida was yet open – was post-apocalyptic, with vast numbers of people huddled at home either alone or with immediate families, fully convinced that a universally deadly virus was lurking outdoors and waiting to snatch the life of anyone foolish enough to seek exercise, sunshine, or, heaven forbid, fun with friends, much less visiting the elderly in nursing homes, which was verboten. Meanwhile, the CDC was recommending that any “essential business” install walls of plexiglass and paste social distancing stickers wherever people would walk. All in the name of science.

I am very aware that all of this sounds utterly ridiculous now, but I assure you that it was serious at the time. Several times, I was personally screamed at for walking only a few feet into a grocery aisle that had been designated by stickers to be one way in the other direction. Also in those days, at least in the Northeast, enforcers among the citizenry would fly drones around the city and countryside looking for house parties, weddings, or funerals, and snap images to send to the local media, which would dutifully report the supposed scandal.

These were times when people insisted on riding elevators alone, and only one person at a time was permitted to walk through narrow corridors. Parents masked up their kids even though the kids were at near zero risk, which we knew from data but not from public health authorities. Incredibly, nearly all schools were closed, thus forcing parents out of the office and back home. Homeschooling, which has long existed under a legal fog, suddenly became mandatory.

Just to illustrate how crazy it all became, a friend of mine arrived home from a visit out of town and his mother demanded that he leave his Covid-infested bags on the porch for three days. I’m sure you have your own stories of absurdity, among which was the masking of everyone, the enforcement of which went from stern to ferocious as time passed.

But these were the days when people believed the virus was outdoors and so we should stay in. Oddly, this changed over time when people decided that the virus was indoors and so we should be outdoors. When New York City cautiously permitted dining in commercial establishments, the mayor’s office insisted that it could only be outdoors, so many restaurants built an outdoors version of indoors, complete with plastic walls and heating units at a very high expense.

In those days, I had some time to kill waiting for a train in Hudson, New York, and went to a wine bar. I ordered a glass at the counter and the masked clerk handed it to me and pointed for me to go outside. I said I would like to drink it inside since it was freezing and miserable outside. I pointed out that there was a full dining room right there. She said I could not because of Covid.

Is this a law? I asked. She said no, it is just good practice to keep people safe.

“Do you really think there is Covid in that room?” I asked.

“Yes,” she said in all seriousness.

At this point, I realized we had fully moved from government-mandated mania to a real popular delusion for the ages.

The commercial carnage for small business has yet to be thoroughly documented. At least 100,000 restaurants and stores in Manhattan alone closed, commercial real estate prices crashed, and big business moved in to scoop up bargains. Policies were decidedly disadvantageous to small businesses. If there were commercial capacity restrictions, they would kill a coffee shop but a large franchise all-you-can-eat buffet that holds 300 would probably be fine.

So too with industries in general: big tech including Zoom and Amazon thrived, but hotels, bars, restaurants, malls, cruise ships, theaters, and anyone without home delivery suffered terribly. The arts were devastated. In the deadly Hong Kong flu of 1968-69, we had Woodstock but this time we had nothing but YouTube, unless you opposed Covid restrictions in which case your song was deleted and your account blasted into oblivion.

Health Care Industry

To talk about the healthcare industry, let’s return to the early days of the frenzy of the Spring of 2020. An edict had gone out from the Centers for Disease Control and Prevention to all public health officials in the country that strongly urged the closing of all hospitals to everyone but non-elective surgeries and Covid patients, which turned out to exclude nearly everyone who would routinely show up for diagnostics or other normal treatments.

As a result, hospital parking lots emptied out from sea to shining sea, a most bizarre sight to see given that there was supposed to be a pandemic raging. We can see this in the data. The healthcare sector employed 16.4 million people in early 2020. By April, the entire sector lost 1.6 million employees, which is an astonishing exodus by any historical standard. Nurses in hundreds of hospitals were furloughed. Again, this happened during a pandemic.

In another strange twist that future historians will have a hard time trying to figure out, health care spending itself fell off a cliff. From March to May 2020, health care spending actually collapsed by $500 billion or 16.5%.

This created an enormous financial problem for hospitals in general, which, after all, are economic institutions too. They were bleeding money so fast that when the federal government offered subsidies of 20 percent above other respiratory ailments if the patient could be declared Covid positive, hospitals jumped at the chance and found cases galore, which the CDC was happy to accept at face value. Compliance with guidelines became the only path toward restoring profitability.

The throttling of non-Covid services included the near abolition of dentistry that went on for months from Spring through Summer. In the midst of this, I worried that I needed a root canal. I simply could not find a dentist in Massachusetts who would see me. They said every patient first needs a cleaning and thorough examination and all those had been canceled. I had the bright idea of traveling to Texas to get it done but the dentist there said they were required by law to make sure all patients from out of state quarantine in Texas for two weeks, time I could not afford. I thought about suggesting to my mother, who was making the appointment, that she simply lie about the date of my arrival but thought better of it given her scruples.

It was a time of great public insanity, not stopped and even fomented by public health bureaucrats. The abolition of dentistry for a time seemed to comply completely with the injunction of the New York Times on February 28, 2020. “To Take on the Coronavirus, Go Medieval on It,” the headline read. We did, even to the point of abolishing dentistry, publicly shaming the diseased on grounds that getting Covid was surely a sign of noncompliance and civic sin, and instituting a feudal system of dividing workers by essential and nonessential.

Labor Markets

Exactly how the entire workforce came to be divided this way remains a mystery to me, but the guardians of the public mind seemed not to care a whit about it. Most of the delineated lists at the time said that you could keep operating if you qualified as a media center. Thus for two years did the New York Times instruct its readers to stay home and have their groceries delivered. By whom, they did not say, nor did they care because such people are apparently not among their reader base. Essentially, the working classes were used as fodder to obtain herd immunity, and then later subjected to vaccine mandates despite superior natural immunity.

Many, as in millions, were later fired for not complying with mandates. We are told that unemployment today is very low and that many new jobs are being filled. Yes, and most of those are existing workers getting second and third jobs. Moonlighting and side gigging are now a way of life, not because it is a blast but because the bills have to be paid.

The full truth about labor markets requires that we look at the labor participation rate and the worker-population ratio. Millions have gone missing. These are working women who still cannot find child care because that industry never recovered, and so participation is back at 1988 levels. They are early retirees. They are 20-somethings who moved home and went on unemployment benefits. There are many more who have just lost the will to achieve and build a future.

The supply chain breakages need their own discussion. The March 12, 2020, evening announcement by President Trump that he would block all travel from Europe, UK, and Australia beginning five days later started a mad scramble to get back to the US. He also misread the teleprompter and said that the ban would also apply to goods. The White House had to correct the statement the next day but the damage was already done. Shipping came to a complete standstill.

Supply Chains and Shortages

Most economic activity stopped. By the time the fall relaxation came and manufacturers started reordering parts, they found that many factories overseas had already retooled for other kinds of demand. This particularly affected the semiconductor industry for automotive manufacturing. Overseas chip makers had already turned their attention to personal computers, cellphones, and other devices. This was the beginning of the car shortage that sent prices through the roof. This created a political demand for US-based chip production which has in turn resulted in another round of export and import controls.

These sorts of problems have affected every industry without exception. Why the paper shortage today? Because so many paper factories shifted to plywood after its price had gone sky-high to feed the housing demand created by generous stimulus checks.

We could write books listing all the economic calamities directly caused by the disastrous pandemic response. They will be with us for years, and yet even today, not many people fully grasp the relationship between our current economic hardships, and even the growing international tensions and breakdown of trade and travel, and the brutality of the pandemic response. It is all directly related.

Anthony Fauci said at the outset: “I don’t think about things like the economy and the secondary impacts.” And Melinda Gates said the same in a December 4, 2020 interview with the New York Times: “What did surprise us is we hadn’t really thought through the economic impacts.”

The wall of separation posited between “economics” and public health did not hold in theory or practice. A healthy economy is indispensable for healthy people. Shutting down economic life was a singularly bad idea for taking on a pandemic.

Conclusion

Economics is about people, their choices, and the institutions that enable them to thrive. Public health is about the same thing. Driving a wedge between the two surely ranks among the most catastrophic public-policy decisions of our lifetimes. Health and economics both require the nonnegotiable called freedom. May we never again experiment with its near abolition in the name of disease mitigation.

This is based on a presentation at Hillsdale College, October 20, 2022, to appear in a shortened version in IMPRIMUS

Addictions

Coffee, Nicotine, and the Politics of Acceptable Addiction

From the Brownstone Institute

Every morning, hundreds of millions of people perform a socially approved ritual. They line up for coffee. They joke about not being functional without caffeine. They openly acknowledge dependence and even celebrate it. No one calls this addiction degenerate. It is framed as productivity, taste, wellness—sometimes even virtue.

Now imagine the same professional discreetly using a nicotine pouch before a meeting. The reaction is very different. This is treated as a vice, something vaguely shameful, associated with weakness, poor judgment, or public health risk.

From a scientific perspective, this distinction makes little sense.

Caffeine and nicotine are both mild psychoactive stimulants. Both are plant-derived alkaloids. Both increase alertness and concentration. Both produce dependence. Neither is a carcinogen. Neither causes the diseases historically associated with smoking. Yet one has become the world’s most acceptable addiction, while the other remains morally polluted even in its safest, non-combustible forms.

This divergence has almost nothing to do with biology. It has everything to do with history, class, marketing, and a failure of modern public health to distinguish molecules from mechanisms.

Two Stimulants, One Misunderstanding

Nicotine acts on nicotinic acetylcholine receptors, mimicking a neurotransmitter the brain already uses to regulate attention and learning. At low doses, it improves focus and mood. At higher doses, it causes nausea and dizziness—self-limiting effects that discourage excess. Nicotine is not carcinogenic and does not cause lung disease.

Caffeine works differently, blocking adenosine receptors that signal fatigue. The result is wakefulness and alertness. Like nicotine, caffeine indirectly affects dopamine, which is why people rely on it daily. Like nicotine, it produces tolerance and withdrawal. Headaches, fatigue, and irritability are routine among regular users who skip their morning dose.

Pharmacologically, these substances are peers.

The major difference in health outcomes does not come from the molecules themselves but from how they have been delivered.

Combustion Was the Killer

Smoking kills because burning organic material produces thousands of toxic compounds—tar, carbon monoxide, polycyclic aromatic hydrocarbons, and other carcinogens. Nicotine is present in cigarette smoke, but it is not what causes cancer or emphysema. Combustion is.

When nicotine is delivered without combustion—through patches, gum, snus, pouches, or vaping—the toxic burden drops dramatically. This is one of the most robust findings in modern tobacco research.

And yet nicotine continues to be treated as if it were the source of smoking’s harm.

This confusion has shaped decades of policy.

How Nicotine Lost Its Reputation

For centuries, nicotine was not stigmatized. Indigenous cultures across the Americas used tobacco in religious, medicinal, and diplomatic rituals. In early modern Europe, physicians prescribed it. Pipes, cigars, and snuff were associated with contemplation and leisure.

The collapse came with industrialization.

The cigarette-rolling machine of the late 19th century transformed nicotine into a mass-market product optimized for rapid pulmonary delivery. Addiction intensified, exposure multiplied, and combustion damage accumulated invisibly for decades. When epidemiology finally linked smoking to lung cancer and heart disease in the mid-20th century, the backlash was inevitable.

But the blame was assigned crudely. Nicotine—the named psychoactive component—became the symbol of the harm, even though the damage came from smoke.

Once that association formed, it hardened into dogma.

How Caffeine Escaped

Caffeine followed a very different cultural path. Coffee and tea entered global life through institutions of respectability. Coffeehouses in the Ottoman Empire and Europe became centers of commerce and debate. Tea was woven into domestic ritual, empire, and gentility.

Crucially, caffeine was never bound to a lethal delivery system. No one inhaled burning coffee leaves. There was no delayed epidemic waiting to be discovered.

As industrial capitalism expanded, caffeine became a productivity tool. Coffee breaks were institutionalized. Tea fueled factory schedules and office routines. By the 20th century, caffeine was no longer seen as a drug at all but as a necessity of modern life.

Its downsides—dependence, sleep disruption, anxiety—were normalized or joked about. In recent decades, branding completed the transformation. Coffee became lifestyle. The stimulant disappeared behind aesthetics and identity.

The Class Divide in Addiction

The difference between caffeine and nicotine is not just historical. It is social.

Caffeine use is public, aesthetic, and professionally coded. Carrying a coffee cup signals busyness, productivity, and belonging in the middle class. Nicotine use—even in clean, low-risk forms—is discreet. It is not aestheticized. It is associated with coping rather than ambition.

Addictions favored by elites are rebranded as habits or wellness tools. Addictions associated with stress, manual labor, or marginal populations are framed as moral failings. This is why caffeine is indulgence and nicotine is degeneracy, even when the physiological effects are similar.

Where Public Health Went Wrong

Public health messaging relies on simplification. “Smoking kills” was effective and true. But over time, simplification hardened into distortion.

“Smoking kills” became “Nicotine is addictive,” which slid into “Nicotine is harmful,” and eventually into claims that there is “No safe level.” Dose, delivery, and comparative risk disappeared from the conversation.

Institutions now struggle to reverse course. Admitting that nicotine is not the primary harm agent would require acknowledging decades of misleading communication. It would require distinguishing adult use from youth use. It would require nuance.

Bureaucracies are bad at nuance.

So nicotine remains frozen at its worst historical moment: the age of the cigarette.

Why This Matters

This is not an academic debate. Millions of smokers could dramatically reduce their health risks by switching to non-combustion nicotine products. Countries that have allowed this—most notably Sweden—have seen smoking rates and tobacco-related mortality collapse. Countries that stigmatize or ban these alternatives preserve cigarette dominance.

At the same time, caffeine consumption continues to rise, including among adolescents, with little moral panic. Energy drinks are aggressively marketed. Sleep disruption and anxiety are treated as lifestyle issues, not public health emergencies.

The asymmetry is revealing.

Coffee as the Model Addiction

Caffeine succeeded culturally because it aligned with power. It supported work, not resistance. It fit office life. It could be branded as refinement. It never challenged institutional authority.

Nicotine, especially when used by working-class populations, became associated with stress relief, nonconformity, and failure to comply. That symbolism persisted long after the smoke could be removed.

Addictions are not judged by chemistry. They are judged by who uses them and whether they fit prevailing moral narratives.

Coffee passed the test. Nicotine did not.

The Core Error

The central mistake is confusing a molecule with a method. Nicotine did not cause the smoking epidemic. Combustion did. Once that distinction is restored, much of modern tobacco policy looks incoherent. Low-risk behaviors are treated as moral threats, while higher-risk behaviors are tolerated because they are culturally embedded.

This is not science. It is politics dressed up as health.

A Final Thought

If we applied the standards used against nicotine to caffeine, coffee would be regulated like a controlled substance. If we applied the standards used for caffeine to nicotine, pouches and vaping would be treated as unremarkable adult choices.

The rational approach is obvious: evaluate substances based on dose, delivery, and actual harm. Stop moralizing chemistry. Stop pretending that all addictions are equal. Nicotine is not harmless. Neither is caffeine. But both are far safer than the stories told about them.

This essay only scratches the surface. The strange moral history of nicotine, caffeine, and acceptable addiction exposes a much larger problem: modern institutions have forgotten how to reason about risk.

Brownstone Institute

The Unmasking of Vaccine Science

From the Brownstone Institute

I recently purchased Aaron Siri’s new book Vaccines, Amen. As I flipped through the pages, I noticed a section devoted to his now-famous deposition of Dr Stanley Plotkin, the “godfather” of vaccines.

I’d seen viral clips circulating on social media, but I had never taken the time to read the full transcript — until now.

Siri’s interrogation was methodical and unflinching…a masterclass in extracting uncomfortable truths.

A Legal Showdown

In January 2018, Dr Stanley Plotkin, a towering figure in immunology and co-developer of the rubella vaccine, was deposed under oath in Pennsylvania by attorney Aaron Siri.

The case stemmed from a custody dispute in Michigan, where divorced parents disagreed over whether their daughter should be vaccinated. Plotkin had agreed to testify in support of vaccination on behalf of the father.

What followed over the next nine hours, captured in a 400-page transcript, was extraordinary.

Plotkin’s testimony revealed ethical blind spots, scientific hubris, and a troubling indifference to vaccine safety data.

He mocked religious objectors, defended experiments on mentally disabled children, and dismissed glaring weaknesses in vaccine surveillance systems.

A System Built on Conflicts

From the outset, Plotkin admitted to a web of industry entanglements.

He confirmed receiving payments from Merck, Sanofi, GSK, Pfizer, and several biotech firms. These were not occasional consultancies but long-standing financial relationships with the very manufacturers of the vaccines he promoted.

Plotkin appeared taken aback when Siri questioned his financial windfall from royalties on products like RotaTeq, and expressed surprise at the “tone” of the deposition.

Siri pressed on: “You didn’t anticipate that your financial dealings with those companies would be relevant?”

Plotkin replied: “I guess, no, I did not perceive that that was relevant to my opinion as to whether a child should receive vaccines.”

The man entrusted with shaping national vaccine policy had a direct financial stake in its expansion, yet he brushed it aside as irrelevant.

Contempt for Religious Dissent

Siri questioned Plotkin on his past statements, including one in which he described vaccine critics as “religious zealots who believe that the will of God includes death and disease.”

Siri asked whether he stood by that statement. Plotkin replied emphatically, “I absolutely do.”

Plotkin was not interested in ethical pluralism or accommodating divergent moral frameworks. For him, public health was a war, and religious objectors were the enemy.

He also admitted to using human foetal cells in vaccine production — specifically WI-38, a cell line derived from an aborted foetus at three months’ gestation.

Siri asked if Plotkin had authored papers involving dozens of abortions for tissue collection. Plotkin shrugged: “I don’t remember the exact number…but quite a few.”

Plotkin regarded this as a scientific necessity, though for many people — including Catholics and Orthodox Jews — it remains a profound moral concern.

Rather than acknowledging such sensitivities, Plotkin dismissed them outright, rejecting the idea that faith-based values should influence public health policy.

That kind of absolutism, where scientific aims override moral boundaries, has since drawn criticism from ethicists and public health leaders alike.

As NIH director Jay Bhattacharya later observed during his 2025 Senate confirmation hearing, such absolutism erodes trust.

“In public health, we need to make sure the products of science are ethically acceptable to everybody,” he said. “Having alternatives that are not ethically conflicted with foetal cell lines is not just an ethical issue — it’s a public health issue.”

Safety Assumed, Not Proven

When the discussion turned to safety, Siri asked, “Are you aware of any study that compares vaccinated children to completely unvaccinated children?”

Plotkin replied that he was “not aware of well-controlled studies.”

Asked why no placebo-controlled trials had been conducted on routine childhood vaccines such as hepatitis B, Plotkin said such trials would be “ethically difficult.”

That rationale, Siri noted, creates a scientific blind spot. If trials are deemed too unethical to conduct, then gold-standard safety data — the kind required for other pharmaceuticals — simply do not exist for the full childhood vaccine schedule.

Siri pointed to one example: Merck’s hepatitis B vaccine, administered to newborns. The company had only monitored participants for adverse events for five days after injection.

Plotkin didn’t dispute it. “Five days is certainly short for follow-up,” he admitted, but claimed that “most serious events” would occur within that time frame.

Siri challenged the idea that such a narrow window could capture meaningful safety data — especially when autoimmune or neurodevelopmental effects could take weeks or months to emerge.

Siri pushed on. He asked Plotkin if the DTaP and Tdap vaccines — for diphtheria, tetanus and pertussis — could cause autism.

“I feel confident they do not,” Plotkin replied.

But when shown the Institute of Medicine’s 2011 report, which found the evidence “inadequate to accept or reject” a causal link between DTaP and autism, Plotkin countered, “Yes, but the point is that there were no studies showing that it does cause autism.”

In that moment, Plotkin embraced a fallacy: treating the absence of evidence as evidence of absence.

“You’re making assumptions, Dr Plotkin,” Siri challenged. “It would be a bit premature to make the unequivocal, sweeping statement that vaccines do not cause autism, correct?”

Plotkin relented. “As a scientist, I would say that I do not have evidence one way or the other.”

The MMR

The deposition also exposed the fragile foundations of the measles, mumps, and rubella (MMR) vaccine.

When Siri asked for evidence of randomised, placebo-controlled trials conducted before MMR’s licensing, Plotkin pushed back: “To say that it hasn’t been tested is absolute nonsense,” he said, claiming it had been studied “extensively.”

Pressed to cite a specific trial, Plotkin couldn’t name one. Instead, he gestured to his own 1,800-page textbook: “You can find them in this book, if you wish.”

Siri replied that he wanted an actual peer-reviewed study, not a reference to Plotkin’s own book. “So you’re not willing to provide them?” he asked. “You want us to just take your word for it?”

Plotkin became visibly frustrated.

Eventually, he conceded there wasn’t a single randomised, placebo-controlled trial. “I don’t remember there being a control group for the studies, I’m recalling,” he said.

The exchange foreshadowed a broader shift in public discourse, highlighting long-standing concerns that some combination vaccines were effectively grandfathered into the schedule without adequate safety testing.

In September 2025, President Trump called for the MMR vaccine to be broken up into three separate injections.

The proposal echoed a view that Andrew Wakefield had voiced decades earlier — namely, that combining all three viruses into a single shot might pose greater risk than spacing them out.

Wakefield was vilified and struck from the medical register. But now, that same question — once branded as dangerous misinformation — is set to be re-examined by the CDC’s new vaccine advisory committee, chaired by Martin Kulldorff.

The Aluminium Adjuvant Blind Spot

Siri next turned to aluminium adjuvants — the immune-activating agents used in many childhood vaccines.

When asked whether studies had compared animals injected with aluminium to those given saline, Plotkin conceded that research on their safety was limited.

Siri pressed further, asking if aluminium injected into the body could travel to the brain. Plotkin replied, “I have not seen such studies, no, or not read such studies.”

When presented with a series of papers showing that aluminium can migrate to the brain, Plotkin admitted he had not studied the issue himself, acknowledging that there were experiments “suggesting that that is possible.”

Asked whether aluminium might disrupt neurological development in children, Plotkin stated, “I’m not aware that there is evidence that aluminum disrupts the developmental processes in susceptible children.”

Taken together, these exchanges revealed a striking gap in the evidence base.

Compounds such as aluminium hydroxide and aluminium phosphate have been injected into babies for decades, yet no rigorous studies have ever evaluated their neurotoxicity against an inert placebo.

This issue returned to the spotlight in September 2025, when President Trump pledged to remove aluminium from vaccines, and world-leading researcher Dr Christopher Exley renewed calls for its complete reassessment.

A Broken Safety Net

Siri then turned to the reliability of the Vaccine Adverse Event Reporting System (VAERS) — the primary mechanism for collecting reports of vaccine-related injuries in the United States.

Did Plotkin believe most adverse events were captured in this database?

“I think…probably most are reported,” he replied.

But Siri showed him a government-commissioned study by Harvard Pilgrim, which found that fewer than 1% of vaccine adverse events are reported to VAERS.

“Yes,” Plotkin said, backtracking. “I don’t really put much faith into the VAERS system…”

Yet this is the same database officials routinely cite to claim that “vaccines are safe.”

Ironically, Plotkin himself recently co-authored a provocative editorial in the New England Journal of Medicine, conceding that vaccine safety monitoring remains grossly “inadequate.”

Experimenting on the Vulnerable

Perhaps the most chilling part of the deposition concerned Plotkin’s history of human experimentation.

“Have you ever used orphans to study an experimental vaccine?” Siri asked.

“Yes,” Plotkin replied.

“Have you ever used the mentally handicapped to study an experimental vaccine?” Siri asked.

“I don’t recollect…I wouldn’t deny that I may have done so,” Plotkin replied.

Siri cited a study conducted by Plotkin in which he had administered experimental rubella vaccines to institutionalised children who were “mentally retarded.”

Plotkin stated flippantly, “Okay well, in that case…that’s what I did.”

There was no apology, no sign of ethical reflection — just matter-of-fact acceptance.

Siri wasn’t done.

He asked if Plotkin had argued that it was better to test on those “who are human in form but not in social potential” rather than on healthy children.

Plotkin admitted to writing it.

Siri established that Plotkin had also conducted vaccine research on the babies of imprisoned mothers, and on colonised African populations.

Plotkin appeared to suggest that the scientific value of such studies outweighed the ethical lapses, an attitude that many would interpret as the classic ‘ends justify the means’ rationale.

But that logic fails the most basic test of informed consent. Siri asked whether consent had been obtained in these cases.

“I don’t remember…but I assume it was,” Plotkin said.

Assume?

This was post-Nuremberg research. And the leading vaccine developer in America couldn’t say for sure whether he had properly informed the people he experimented on.

In any other field of medicine, such lapses would be disqualifying.

A Casual Dismissal of Parental Rights

Plotkin’s indifference extended beyond experiments on disabled children.

Siri asked whether someone who declined a vaccine due to concerns about missing safety data should be labelled “anti-vax.”

Plotkin replied, “If they refused to be vaccinated themselves or refused to have their children vaccinated, I would call them an anti-vaccination person, yes.”

Plotkin was less concerned about adults making that choice for themselves, but he had no tolerance for parents making those choices for their own children.

“The situation for children is quite different,” said Plotkin, “because one is making a decision for somebody else and also making a decision that has important implications for public health.”

In Plotkin’s view, the state held greater authority than parents over a child’s medical decisions — even when the science was uncertain.

The Enabling of Figures Like Plotkin

The Plotkin deposition stands as a case study in how conflicts of interest, ideology, and deference to authority have corroded the scientific foundations of public health.

Plotkin is no fringe figure. He is celebrated, honoured, and revered. Yet he promotes vaccines that have never undergone true placebo-controlled testing, shrugs off the failures of post-market surveillance, and admits to experimenting on vulnerable populations.

This is not conjecture or conspiracy — it is sworn testimony from the man who helped build the modern vaccine program.

Now, as Health Secretary Robert F. Kennedy, Jr. reopens long-dismissed questions about aluminium adjuvants and the absence of long-term safety studies, Plotkin’s once-untouchable legacy is beginning to fray.

Republished from the author’s Substack

-

International1 day ago

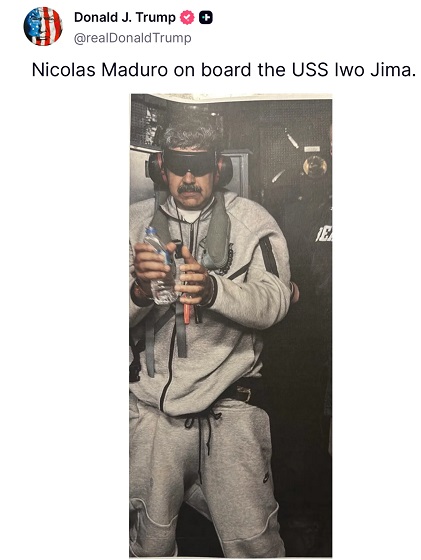

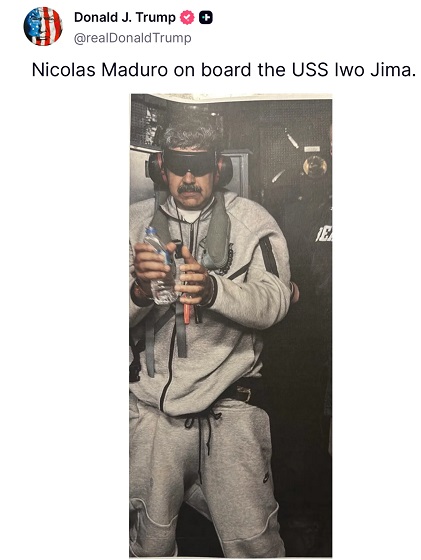

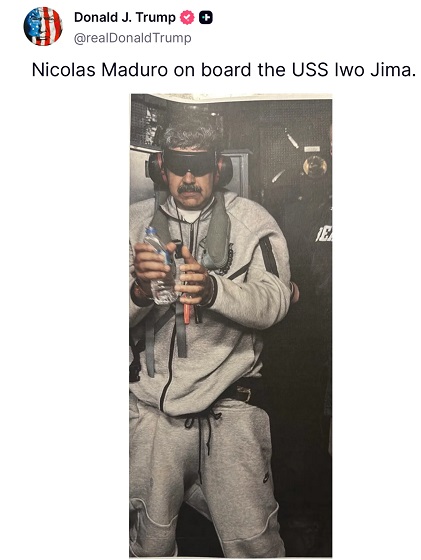

International1 day ago“Captured and flown out”: Trump announces dramatic capture of Maduro

-

International1 day ago

International1 day agoTrump Says U.S. Strike Captured Nicolás Maduro and Wife Cilia Flores; Bondi Says Couple Possessed Machine Guns

-

Energy1 day ago

Energy1 day agoThe U.S. Just Removed a Dictator and Canada is Collateral Damage

-

International1 day ago

International1 day agoUS Justice Department Accusing Maduro’s Inner Circle of a Narco-State Conspiracy

-

Haultain Research1 day ago

Haultain Research1 day agoTrying to Defend Maduro’s Legitimacy

-

Business1 day ago

Business1 day agoVacant Somali Daycares In Viral Videos Are Also Linked To $300 Million ‘Feeding Our Future’ Fraud

-

Daily Caller18 hours ago

Daily Caller18 hours agoTrump Says US Going To Run Venezuela After Nabbing Maduro

-

International1 day ago

International1 day agoU.S. Claims Western Hemispheric Domination, Denies Russia Security Interests On Its Own Border